Privacy in the Age of Facial Recognition

8-Jul | Written by Alexander Rau

As a security and privacy professional, I find it hard not to have heard of the controversial facial recognition app Clearview AI. It made the news earlier this year when the New York Times broke the story. It was only a matter of time before someone combined Internet/social media crawling technologies with artificial intelligence to build an app that creates a facial recognition database of pictures/faces and the available social media data about those individuals. It is not surprising that law enforcement seems to appreciate the ability to identify and track individuals online with such an app.

On June 10th, 2020, Thomas Daigle published an article on CBC.ca describing how he requested the data and pictures that Clearview AI had stored of him. Daigle based his request on Clearview’s privacy policy, which gives individuals the ability to request copies of their data. According to the policy, you have “The right to access - You have the right to request that Clearview AI provides you with copies of your personal data”.

After reading about Clearview AI and the articles that followed, my curiosity was piqued and I wanted to investigate further. I decided to make a request for my data/pictures stored by Clearview AI with the intent to:

See what, if any, data/pictures they have of me

Find out where they got the information from; according to Thomas Daigle’s article, the results include links to the sources from which Clearview AI obtained the pictures

Confirm whether my pictures/data on any of the websites that allowed Clearview AI to obtain them could be deleted, locked down, or otherwise dealt with so that they would no longer be publicly accessible

To begin the process, I had my wife take my headshot as you need to send a headshot of yourself to Clearview AI in order for them to search their database for your image. Once the photo was taken, I sent Clearview a short and simple email:

Figure 1 – Email to Clearview AI to request my pictures and data

After 26 hours and 19 minutes, I received a response from the Clearview Privacy team:

Figure 2 – Email response from Clearview AI Privacy Team

With curiosity, I opened the PDF:

Figure 3 – Attachment with search results

I wasn’t sure whether to be happy or disappointed with the results they sent me. One picture from one source?!?!

What does this mean? Well, it could mean that their AI only crawled one picture from one publicly available web page. Yet what about my public LinkedIn profile, for example? It uses the same picture that they found on this other site. Now I have to admit, I am not a heavy social media user with a big Twitter, Instagram, or TikTok presence, but I do use the usual suspects like Facebook and LinkedIn fairly frequently.

Of the questions I wanted to have answered from my own investigation, I really only received an answer to question #1. This also makes me question whether they really provided me with ALL the information they have on me… but let’s not go there just yet, maybe that needs to be addressed in a whole other article.

After receiving limited answers to my questions, I started thinking, why not do a reverse picture search myself with the headshot I provided to Clearview? Google allows for this and you can find the explanation for how to do a reverse picture search here.

Now, Google’s search algorithms are not programmed for facial recognition; rather, they analyze a picture to try to ascertain what it depicts. In my case, Google assumed my headshot was about the term “active shirt” and ran the reverse image search on that basis. As such, the search did not find any Internet sources with my picture publicly available:

Figure 4 – Google Reverse Image Search results

Since Google’s search and Clearview AI’s results differ for obvious reasons, the results really can’t be compared. As such, I decided to take it a step further, and went on to perform a reverse image search with the picture Clearview’s facial recognition AI found of me. I wanted to see if Google could turn up additional publicly available Internet sources of this specific picture.

Interestingly enough, when I uploaded my professional headshot to Google its search algorithm thought I was looking for the term ‘gentleman’. This time Google did find matching images on the Internet, and even found the picture that Clearview AI had in its database. In addition to this photo, it found four more on the same website as well as seven different impressions from LinkedIn.

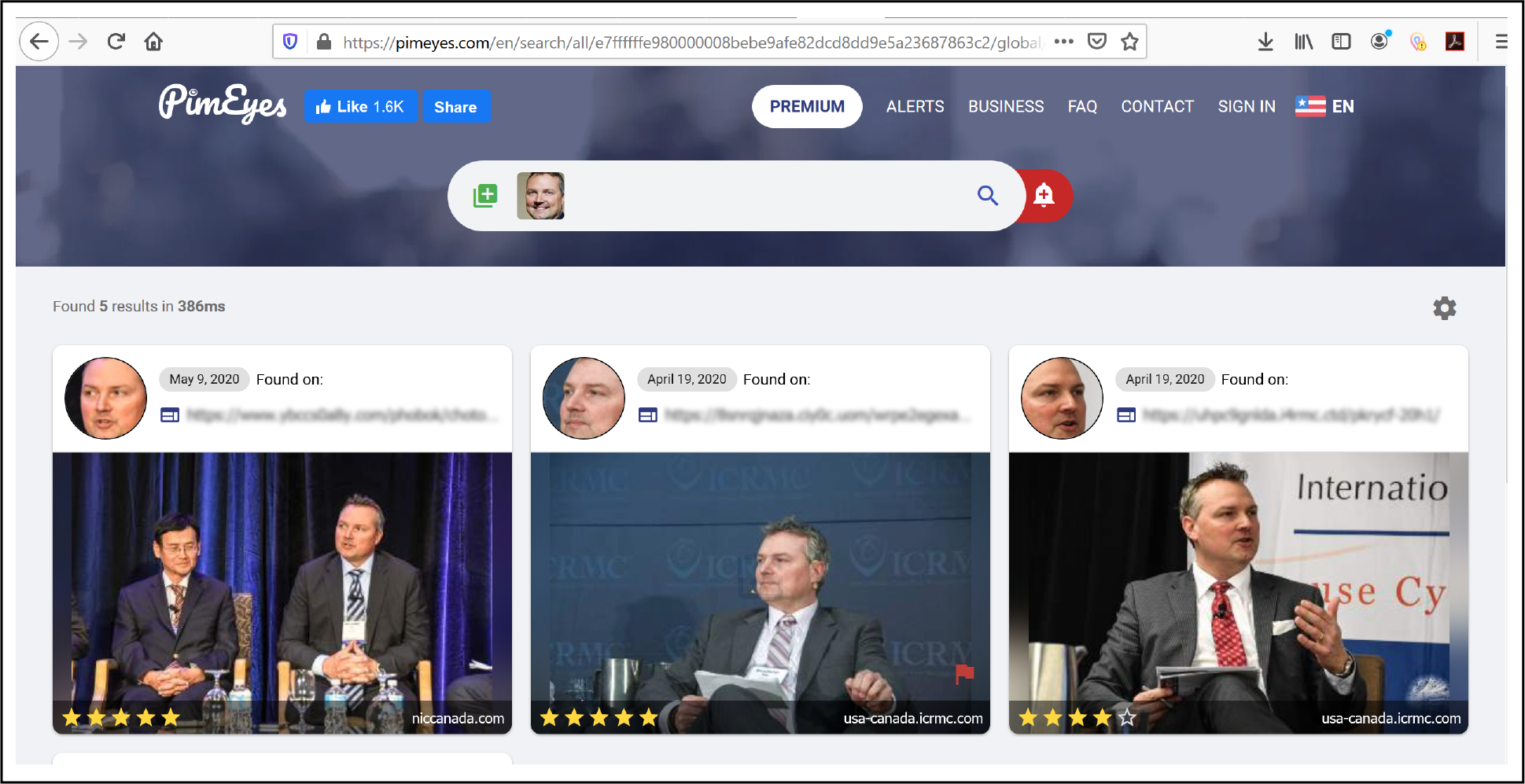

I then tried some publicly available facial recognition search websites such as https://pimeyes.com and https://betaface.com. PimEyes was able to find additional pictures that neither Clearview’s AI nor Google’s reverse image search found.

Figure 5 – PimEyes Image Search results

In conclusion, it was a worthwhile exercise to ask Clearview AI for my data and pictures, although it did not bring the results I had hoped for (or did it!?). While I may not have received all the answers I wanted, I feel it doesn’t hurt to perform image searches on the Internet to see what is out there and possibly what we are not aware of. In an age of facial recognition, one should be especially careful about what is online that could be used/accessed freely and openly to create databases for one reason or another. It might be easier to control pictures someone posts intentionally on social media platforms such as Facebook and LinkedIn, as those sites can either be locked down with security features (Facebook) or are meant to be semi-public so one can be found (LinkedIn).

What about pictures that have been taken of someone and posted to the Internet with or without that person’s consent? Checking for pictures periodically will allow you to identify and possibly remove images that were posted without your knowledge or that you feel should not be out there. This is also important when it comes to the safety and security of our children. While there are policies in place to try and protect our children, who really knows whether pictures of them from school functions, sporting events, or other activities have been shared, knowingly or unknowingly? To be on the safe side, I will definitely be performing a search myself to see what pictures can be found of my children, if any, just to be sure.

One interesting observation about this exercise was that there was no validation of my identity or of the picture I sent to Clearview AI. I have to admit, it fleetingly crossed my mind to send a picture of someone else to see if I would receive a response with their info; however, I did not want to rock that boat and get into hot water. It is definitely something to ponder, though.

One final thought about facial recognition: as I wrote this article and did some research, I came across an article, Easily Stop Facial Recognition (German only). It mentions practices such as image smoothing and pixelation before uploading, to prevent facial recognition AIs such as Clearview’s from recognizing one’s picture, or at least to make it more difficult. Again though, this only gives control over the pictures one uploads personally, not the ones uploaded by others.
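To make the idea of pixelation concrete, here is a minimal sketch in Python. It is not the method from the German article, whose details I am not reproducing here, and it makes no claim about how well the result holds up against any particular facial recognition system. The function name `pixelate` and the representation of a picture as a list of rows of grayscale values (0 to 255) are assumptions chosen for illustration; a real photo would first have to be loaded with an imaging library.

```python
def pixelate(pixels, block):
    """Return a copy of a grayscale image in which every block x block
    tile is replaced by the average brightness of that tile.

    `pixels` is a list of rows, each row a list of ints (0-255).
    This representation is an illustrative assumption, not a file format.
    """
    if block < 1:
        raise ValueError("block must be at least 1")
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    result = [row[:] for row in pixels]  # leave the input untouched
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Tiles at the right and bottom edges may be smaller.
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [pixels[y][x] for y in rows for x in cols]
            average = sum(tile) // len(tile)
            for y in rows:
                for x in cols:
                    result[y][x] = average
    return result


# A tiny 4x4 "image" with four visually distinct 2x2 regions.
image = [
    [0, 10, 200, 210],
    [20, 30, 220, 230],
    [100, 100, 50, 50],
    [100, 100, 50, 50],
]
blurred = pixelate(image, 2)
for row in blurred:
    print(row)
```

The larger the block size, the more fine detail is averaged away, which is the trade-off behind the practice: the picture becomes harder to match, but also less useful as a picture of you.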

Paranoia or not, Aldous Huxley (“Brave New World”) and George Orwell (“1984”) send their regards as we definitely live in interesting times.

Just as this article was about to be published, news broke: “Clearview AI stops offering facial recognition software in Canada amid privacy probe”. With the RCMP being the last law enforcement agency in Canada to suspend the use of Clearview AI, Clearview also announced it would cease operations in Canada. Clearview also mentioned that Canadians can now opt out of the company’s search results, although it remains unclear what exactly this means and how to do so. I also believe that opting out does not mean that someone’s pictures are removed; they might still be in Clearview’s database. This is one of the most controversial privacy-related issues with Clearview AI’s application. Clearview does allow the deletion of records of individuals from certain jurisdictions such as California, the European Union, the UK and Switzerland, but the site has not been updated to include Canadian citizens.

Figure 6 – Clearview’s Privacy Request Forms Page

It hopefully will just be a matter of time before Clearview updates its site to allow Canadians to opt out and request deletion of their records in the company’s database.

In closing, a hopeful quote by Ann Cavoukian, the former privacy commissioner of the province of Ontario, from the CBC article: “This shows that we can indeed make a difference and stop privacy-invasive practices”. We just have to be aware of such privacy-invasive practices and work together to address them.

Additional cybersecurity related articles can be found on my Cybersecurity BLOG.

Disclaimers:

All views expressed in this article are my own and do not represent the opinions of any entity with which I have been, am now, or will be affiliated.

The information provided in this article is for educational purposes only and provided “as is”. By no means is the information provided intended to prevent privacy and data breaches from occurring. As with all matters please seek professional guidance to address the unique privacy and cybersecurity risks for yourself and your organization.